Robust Visual Tracking via Structured Multi-Task Sparse Learning

Tianzhu Zhang

Bernard Ghanem

Si Liu

Narendra Ahuja

National Lab of Pattern Recognition

Institute of Automation, Chinese Academy of Sciences

Abstract

In this paper, we formulate object tracking in a particle filter framework as a structured multi-task sparse learning problem, which we denote as Structured Multi-Task Tracking (S-MTT). We model particles as linear combinations of dictionary templates that are updated dynamically, so learning the representation of each particle is treated as a single task in Multi-Task Tracking (MTT). Using popular sparsity-inducing ℓ_{p,q} mixed norms (specifically p ∈ {2, ∞} and q = 1), we regularize the representation problem to enforce joint sparsity and learn the particle representations together. Compared to previous methods that handle particles independently, our results demonstrate that mining the interdependencies between particles improves tracking.
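The joint-sparsity idea can be made concrete with a small sketch. Here we assume, for illustration only, that the particles are stacked as the columns of X and the dictionary templates as the columns of B. We also assume that the representation minimizes ½‖X − BC‖²_F + λ‖C‖_{p,1}, where ‖C‖_{p,1} sums the ℓp norms of the rows of C (one row per template). The ℓ_{2,1} case is then solved with a plain proximal-gradient (ISTA) loop. The function names, the step size 1/L, and the use of ISTA are our assumptions, and the solver and exact objective (Eq.(9)) in the paper may differ.

```python
import numpy as np

def mixed_norm(C, p):
    """l_{p,1} mixed norm: the sum over rows of the l_p norm of each row.
    Rows index dictionary templates, columns index particles.
    p may be 2 or np.inf, matching p in {2, inf} with q = 1."""
    return float(np.sum(np.linalg.norm(C, ord=p, axis=1)))

def row_soft_threshold(C, tau):
    """Proximal operator of tau * ||C||_{2,1}.
    Each row's l2 norm is shrunk by tau; rows whose norm is below tau
    become exactly zero, dropping that template for every particle."""
    norms = np.linalg.norm(C, axis=1, keepdims=True)
    scale = np.maximum(0.0, 1.0 - tau / np.maximum(norms, 1e-12))
    return scale * C

def joint_sparse_code(X, B, lam, n_iter=500):
    """ISTA for min_C 0.5*||X - B C||_F^2 + lam*||C||_{2,1}.
    X: d x n matrix of particles (one per column).
    B: d x m dictionary (one template per column).
    Returns C: m x n coefficient matrix."""
    L = np.linalg.norm(B, 2) ** 2  # Lipschitz constant of the smooth term's gradient
    C = np.zeros((B.shape[1], X.shape[1]))
    for _ in range(n_iter):
        grad = B.T @ (B @ C - X)  # gradient of the least-squares term
        C = row_soft_threshold(C - grad / L, lam / L)
    return C
```

Because each row of C is shrunk as a whole, a template that is discarded is discarded for all particles at once, which is the "few but the same templates" behaviour the approach relies on. Swapping the row ℓ2 norm for ℓ∞ gives the ℓ_{∞,1} variant. Its proximal step needs a projection onto an ℓ1 ball, so it is omitted from this sketch.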


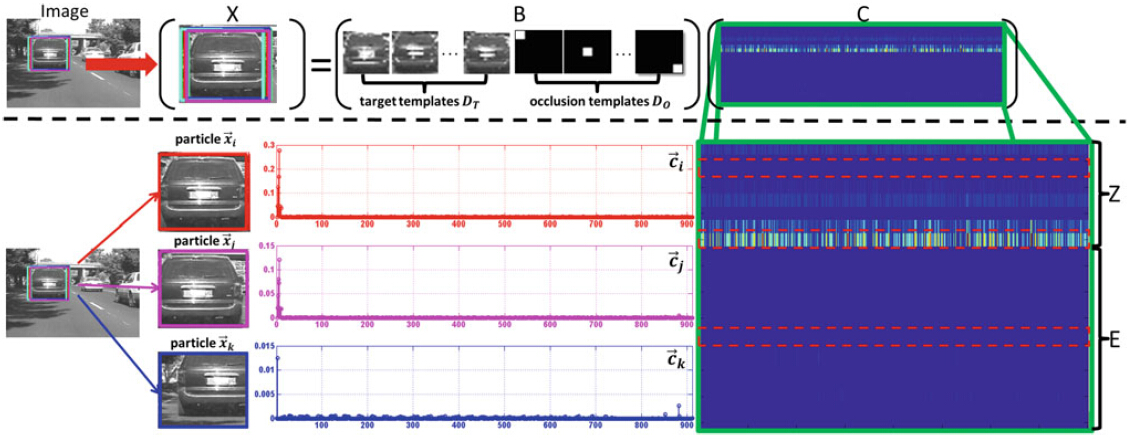

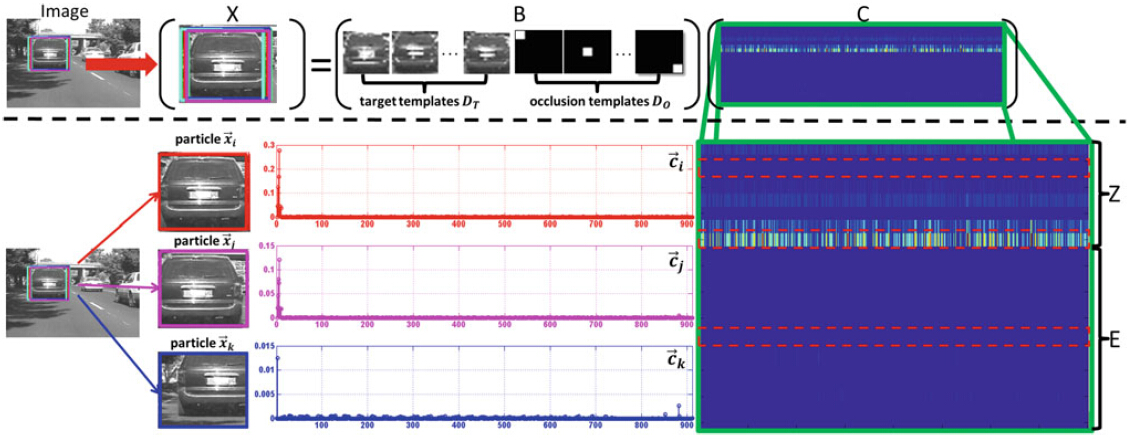

Figure 1 - Schematic example of the L21 tracker. The representation C of all particles X with respect to dictionary B (the set of target and occlusion templates) is learned by solving Eq.(9) with p = 2 and q = 1. Notice that the columns of C are jointly sparse, i.e. a few (but the same) dictionary templates are used to represent all the particles together. Particle x_i is selected from all particles as the tracking result because it is the one best represented by the object templates alone.
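The selection rule in the caption can be sketched as follows. We assume, hypothetically, that the first `n_object` columns of B are the object templates and the remaining columns are occlusion templates. Each particle is scored by the ℓ2 residual left after reconstructing it from its object-template coefficients only, and the particle with the smallest residual is returned. This is an illustrative reading of the caption, not the authors' code.

```python
import numpy as np

def select_particle(X, B, C, n_object):
    """Return the index of the particle best reconstructed by object templates alone.

    X: d x n particles (one per column); B: d x m dictionary; C: m x n coefficients.
    Hypothetical layout: columns 0..n_object-1 of B are object templates,
    the rest are occlusion templates."""
    B_obj = B[:, :n_object]            # object-template part of the dictionary
    C_obj = C[:n_object, :]            # matching coefficient rows
    residual = X - B_obj @ C_obj       # occlusion contribution is deliberately ignored
    errors = np.linalg.norm(residual, axis=0)  # one reconstruction error per particle
    return int(np.argmin(errors)), errors
```

In a full tracker, C would come from the joint sparse coding step and X would hold the vectorized candidate patches. A particle that needs occlusion templates to be explained gets a larger residual here and is therefore less likely to be chosen.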

Video Results